Monitor your model

Now that you’ve completed the free trial tutorial for the sample taxi fare model (see the Explore sample taxi fare model tutorial for more details), it’s time to explore the sample notebook, monitor_placeholder_api_generic.ipynb. The notebook uses the Python client SDK to create a placeholder model, segments, and policies similar to those in the sample taxi fare model. Once created, the model is submitted for monitoring so that you can get insight into its performance and accuracy.

To monitor a model, you need to:

- Create a placeholder model deployment

- Load inference data and ground truth

- Create and run segments and policies

- Monitor the deployed model in the platform

Create a placeholder model deployment

To monitor a model deployed outside of VIANOPS, you create and deploy an internal placeholder model that operates as a reference for that model. Using the sample notebook available from VIANOPS, you can deploy a placeholder model for your model. Note that many of the tasks illustrated in the notebook can be accomplished using either the API (SDK or REST API) or the UI. The sample notebook uses the VIANOPS Python client SDK to create and deploy a new placeholder model. See the VIANOPS developer documentation for more information on the Python client SDK.

Explanation of the sample model and notebook

In this tutorial, a sample regression model that predicts the tip amount for taxi trips in New York City runs outside of VIANOPS. To monitor this model in the platform, the sample notebook monitor_placeholder_api_generic.ipynb creates a placeholder model named “Taxi fare model” and a related project, “Taxi fare project”. Sample data for the taxi trips is used to create the placeholder model and simulate inference data for past and future timeframes. The sample data is available in a CSV file located in the /inputs folder. Ground truth (i.e., the actual values for those predictions) is added so the platform can calculate model performance (i.e., the accuracy of its predictions given the actual data). Once the data is in place, the notebook creates segments to narrow drift analysis to specific populations in the data. Policies are configured to detect feature and performance drift across specified data and within specific time windows.

When finished, the notebook runs the configured policies. Incoming inferences are compared against baseline data to detect values outside defined thresholds and metric settings. Policies run against all specified data (varies by policy configured); in addition, policies configured with segments then run again against the data defined by the segments, resulting in multiple policy runs providing additional results to help understand your model.

Results are viewable in the policy and data segment pages in the UI. See the Explore sample taxi fare model tutorial for more details.

Create a placeholder model deployment in a notebook

-

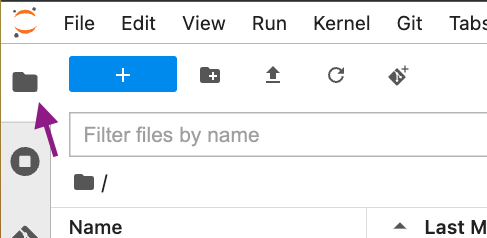

Sign in to the VIANOPS Jupyter service for your platform,

https://edahub.(your_platform_domain), using your VIANOPS login. (For example, if you access the platform athttps://monitoring.company.vianai.site/then the domain iscompany.vianai.siteand the Jupyter service ishttps://edahub.company.vianai.site).The sample notebook is available under notebooks > samples > monitor_placeholder. Here you can find the notebook (

monitor_placeholder_api_generic.ipynb) and a Python file (helper.py). The /inputs folder contains the dataset configured for the notebook. The dataset provides sample NYC Yellow Cab taxi fare data collected over a three-month period for Brooklyn and Manhattan routes. - First, you need to copy the monitor_placeholder folder structure to your home directory and create an /inputs subfolder.

- Navigate to your home directory:

  - Open a terminal window (File > New > Terminal).

  - Enter the following command into the terminal:

    cp -R /notebooks/samples/monitor_placeholder/ .

  A new monitor_placeholder folder appears in your home directory.

- From the new monitor_placeholder folder, open the notebook monitor_placeholder_api_generic.ipynb.

- Under the section “Specify imports and set up variables”, run the first cell to add all imports needed for the notebook.

- Run the second cell to set the cluster, account, data, and connection settings. These values are retrieved from the platform configuration and helper file. If the notebook cannot read these values from the system variables, it prompts you to enter them.

  If prompted, enter the values:

  - cluster: The cluster location consists of the URL domain after monitoring, up to but not including the forward slash. For example, if the VIANOPS URL is https://monitoring.name.company.site then the cluster location is name.company.site.

  - username and password: For the account authenticated for the target cluster.

  - do_drift: Set to y so that the notebook supports drift data/policies.

  - run_optional_dummy: Set to y so that policies, segments, etc. are viewable in the UI pages.

  - connection_type: Retrieves the connection setting configured for the system. Set to COLUMNDB (column-oriented data store).
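As a rough illustration of the naming rule above, the following sketch derives the cluster location and the Jupyter service URL from a platform URL. The function names are hypothetical and are not part of the VIANOPS SDK or helper file; the URLs are the example values used in this tutorial.

```python
from urllib.parse import urlparse


def cluster_from_url(platform_url: str) -> str:
    """Return the part of the host name that follows the 'monitoring.' prefix."""
    host = urlparse(platform_url).hostname or ""
    prefix = "monitoring."
    if not host.startswith(prefix):
        raise ValueError(f"expected a host starting with {prefix!r}, got {host!r}")
    return host[len(prefix):]


def jupyter_url(cluster: str) -> str:
    """Build the Jupyter service URL from the edahub.<domain> pattern."""
    return f"https://edahub.{cluster}"


cluster = cluster_from_url("https://monitoring.name.company.site/")
print(cluster)               # name.company.site
print(jupyter_url(cluster))  # https://edahub.name.company.site
```

Parsing the URL rather than slicing the string keeps the result free of the scheme and any trailing path.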

- Click the third cell, review the values set for this placeholder model deployment, and press Run.

  - If using the sample notebook as is, do not modify these values since they are configured specifically to support this notebook workflow.

  - If you want to extend the sample notebook with advanced monitoring, you likely need to modify one or more values. See the “Change the feature set for the model” section of the advanced monitoring tutorial for more details.

| Notebook variable | Value |
| --- | --- |
| experiment_name | Required. The sample notebook creates a regression model. Must be either regression or classification. |
| project_name | Required. Name for the placeholder project. Model artifacts are associated with the project, including the model, policies, and segments. |
| deployment_name | Required. Name for the placeholder deployment. |
| model_name | Required. Name for the placeholder model. |
| model_version | Required. Version of the placeholder model to deploy. |
| model_stage | Required. Must be primary. |
| featureset | Required. Feature set used to train the placeholder model. |
| allcolumns | Required. All columns in the feature set. By default, these are the columns in the sample notebook local data file provided in the /inputs folder. If you are using another feature set (different local file), make sure the columns listed here match the columns in your feature set. To specify different columns, see the “Change the feature set for the model” section of the advanced monitoring tutorial for more details. |
| continuouscolumns | Optional. Columns in the feature set containing continuous data. (Typically, these are numerical columns with integer or float data types.) Use empty brackets [] if there are no continuous columns. Make sure the columns listed here match the columns in your feature set. (By default, these are the continuouscolumns in the sample notebook local data file provided in the /inputs folder. To specify different continuous columns, see the “Change the feature set for the model” section of the advanced monitoring tutorial for more details.) |
| categoricalcolumns | Optional. Columns in the feature set containing categorical data. Use empty brackets [] if there are no categorical columns. Make sure the columns listed here match the columns in your feature set. (By default, these are the categoricalcolumns in the sample notebook local data file provided in the /inputs folder. To specify different categorical columns, see the “Change the feature set for the model” section of the advanced monitoring tutorial for more details.) |
| str_categorical_cols | Optional. Categorical columns in the feature set that should be set to string format. Use empty brackets [] if there are none. Make sure the columns listed here match the columns in your feature set. (By default, these are categoricalcolumns in the sample notebook local data file provided in the /inputs folder. To specify different columns, see the “Change the feature set for the model” section of the advanced monitoring tutorial for more details.) |
| targetcolumn | Required. Name of the feature set column that the model is predicting. Make sure this column matches a column in your feature set. (By default, this is the targetcolumn for the sample notebook local data file provided in the /inputs folder. To specify a different column as the target, see the advanced monitoring tutorial for extending the sample notebook with advanced monitoring.) |
| offset_col | Required. Feature set column containing the offset value (datetime format), used for generating time/date stamps identifying when predictions were made. Offsets the given datetime to today minus n days. Make sure this column matches a column in your feature set. (By default, this is the offset_col for the sample notebook local data file provided in the /inputs folder. To specify a different column for the offset value, see the “Change the feature set for the model” section of the advanced monitoring tutorial for more details.) |
| identifier_col | Required. Column containing the identifier feature that uniquely identifies predictions. (When the model sends predictions, the platform uses the identifier column to map the model’s ground truth data.) |
| directory | Optional. Local directory location containing data files for the notebook. These may be JSON, CSV, Parquet, or ZIP files (where each ZIP file contains only one compressed file). This must be a subdirectory in the notebook folder. Set to inputs by default. If you change the directory location for reading local files, make sure this directory is identified correctly here. To use different data for your placeholder model, see the “Change the feature set for the model” section of the advanced monitoring tutorial. |
| upload_method | Required. Method for sending predictions received from the model from the placeholder deployment to VIANOPS. Supported values: json (default if not defined), which creates raw JSON inputs and outputs to POST to the inference tracking API; file, which caches uploaded files based on batch size and sends the cache key on POST to the inference tracking API. |
| batch_size | Required. Number of batches for splitting the prediction data (for either value of upload_method). If None, the platform reads the full prediction file. |
| n_days_data | Optional. Number of days’ worth of data present in the notebook. This value is specific to the taxi data and used in the helper Python file. |
| days_future | Required. Used to offset the available data into the future. For example, if n_days_data is set to 92 and days_future is set to 5, the data is offset to provide 5 days of future data and 87 days of historical data, starting from today. |
| days_drift | Required. Used to upload data from the latest date and the days leading up to that date. For example, if days_drift is set to 7 and days_future is set to 5, then data drifts over the last 2 days and then the next 5 days (i.e., the offset is from today’s date plus days_future). |
| policy_run_days | Optional. Number of previous days for running the policy. For example, if set to 14 and the current date is May 15th, then the policy runs from May 2nd to May 15th (today). |
| connection | Required. Database location for the inference store. |
| segment1_name | Required. Identifies a segment that contains only data for the lower Manhattan taxi route. |
| segment2_name | Required. Identifies a segment that contains only data for the Williamsburg to Manhattan taxi route. |
| base_policy_month | Required. Identifies a policy that checks for drift each month using inferences from all data from a month prior as baseline. |
| policy1 | Required. Identifies a policy that checks for drift each week using defined segments and inferences from the previous week as baseline. |
| policy2 | Required. Identifies a policy that checks for drift each day using defined segments and inferences from the previous day as baseline. |
| mp_policy | Required. Identifies a policy that runs the MAE metric to calculate model accuracy and detect issues with performance. |

If you are creating other segments or policies (as explained in the “Create multiple policies for placeholder deployment” section of the advanced monitoring tutorial), specify the names for those objects instead of these variables.
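The date-related variables (n_days_data, days_future, days_drift, and policy_run_days) are easier to follow with the arithmetic written out. The sketch below reproduces the worked examples from the variable descriptions. It is an illustration only: the function names are hypothetical, the helper file may compute these windows differently, and it assumes that today counts as a historical day.

```python
from datetime import date, timedelta


def data_window(today, n_days_data, days_future):
    """First and last day (inclusive) covered by the shifted sample data."""
    last_day = today + timedelta(days=days_future)
    first_day = last_day - timedelta(days=n_days_data - 1)
    return first_day, last_day


def drift_window(today, days_drift, days_future):
    """First and last day (inclusive) of the drifted data."""
    last_day = today + timedelta(days=days_future)
    return last_day - timedelta(days=days_drift - 1), last_day


def policy_window(today, policy_run_days):
    """First and last day (inclusive) over which a policy runs."""
    return today - timedelta(days=policy_run_days - 1), today


today = date(2024, 5, 15)  # arbitrary example date; the year is an assumption

first, last = data_window(today, n_days_data=92, days_future=5)
print((today - first).days + 1, (last - today).days)  # 87 5

first, last = drift_window(today, days_drift=7, days_future=5)
print((today - first).days + 1, (last - today).days)  # 2 5

print(policy_window(today, policy_run_days=14))
# (datetime.date(2024, 5, 2), datetime.date(2024, 5, 15))
```

Each pair of printed numbers is the count of historical days (including today) followed by the count of future days.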

- Run the fourth cell to create the model deployment, feature, segment, and policy variables using the values you specified as inputs and variables.

Note: If you create other segments or policies (as explained in the advanced monitoring tutorial sections “Create a new segment for a policy” and “Create a new policy”), you would specify the names for those objects in both cell 3 and cell 4.

- Run the fifth cell to create variables formatted for the UI using the input variables.

- Run the sixth cell to create the values (deployment_dict) that the notebook uses when sending inference tracking to the backend (i.e., the values for the helper.py populate_data() method).

Now you can deploy the placeholder model.

- Under the section “Creating a placeholder deployment”, run the cell to create the placeholder deployment by calling this method:

  deploy_api.create_placeholder(placeholder_deployment_params)

- Under the section “Create a project in modelstore”, run the cell to create the project by calling this method:

  project_api.create(projects_param)

- Under the section “Create a model in modelstore”, run the cell to create the model by calling this method:

  model_api.create(models_param)

- Run the cell in the “Optional” section to create and upload a dummy dataset by calling this method:

  dataload_api.submit(dataloading_job)

These methods create a new placeholder model deployment in VIANOPS, using the values configured in the notebook. When finished, you can find the new project and model in the UI; navigate using the project and model dropdown lists. See the Explore sample taxi fare model tutorial for more details.

Next, load inference data, predictions, and ground truth for the model and set up segments and policies. When running, the policies analyze the new inference data (created by the model) for drift and performance issues.

Load inference data and ground truth

As opposed to standard models, placeholder models are not trained for specific datasets. Instead, they operate solely as references for deployed models. To support drift and performance monitoring for the model, VIANOPS creates an inference mapping identifying the schema for the model’s inferences. Once the inference mapping is in place, the model can send inferences/predictions to VIANOPS for policy monitoring and metrics analysis.

Note: Although the platform can infer the schema, configuring it explicitly is a reliable best practice and recommended to ensure the platform can understand, save, and access the data correctly.

Want to create a custom inference mapping? When using the sample notebook with the basic workflow, running the “Load inference mapping schema” cell creates the inference mapping for the sample local dataset. When using the sample notebook with a different dataset (different local file), you can create a custom inference mapping for that data. In that case, do not run the “Load inference mapping schema” cell; instead, create and run a notebook cell with a custom inference mapping. See the section “Create a custom inference mapping (optional)” in the advanced monitoring tutorial.

The following steps are for running the sample notebook without changes to inference mapping or data.

- Run the cell “Load inference mapping schema” to create the inference mapping for the model by calling this method:

  inference_mapping_api.create_inference_mapping(inference_mapping)

- Run the cell “Send inference tracking to the backend”. This specifies the location of the inferences (directory), how to read them (batch size, upload method, etc.), and how to create future and drift data. The helper.py method populate_data() reads inferences from the defined CSV file in the /inputs folder and uploads them to the platform cache. It then reads from the cache based on the defined batch_size.

- Next, run each of the cells in the section “Fill ground truth data for model performance”. These set up values for ground truth, then use the SDK to submit it to the backend database.

- Finally, run the cell “Run a preprocess job for model performance”. This joins the model’s inference mapping and ground truth values for the specified start and end time values, performance tasks, and policies to calculate model accuracy and detect performance drift.
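Conceptually, the preprocess step pairs each prediction with its ground truth value through the identifier column and then scores the pairs. The following minimal sketch shows that idea with plain dictionaries and the MAE metric. It is not the platform’s implementation; the identifiers, values, and function name are made up for illustration.

```python
def mean_absolute_error(predictions, ground_truth):
    """MAE over the identifiers that appear in both mappings."""
    shared = predictions.keys() & ground_truth.keys()
    if not shared:
        raise ValueError("no predictions could be matched to ground truth")
    return sum(abs(predictions[k] - ground_truth[k]) for k in shared) / len(shared)


# Hypothetical identifier -> value mappings (not taken from the sample dataset).
predictions = {"trip-001": 12.0, "trip-002": 8.5, "trip-003": 20.0}
ground_truth = {"trip-001": 10.0, "trip-002": 9.5, "trip-004": 7.0}

# Only trip-001 and trip-002 have both a prediction and a ground truth value.
print(mean_absolute_error(predictions, ground_truth))  # 1.5
```

Predictions without matching ground truth (and the reverse) are skipped here, which is why a reliable identifier column matters for performance metrics.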

After submitting inferences/predictions and ground truth to VIANOPS, you can review the model using platform UI visualization features. See the Explore sample taxi fare model tutorial for more details.

Now you can create segments and policies to monitor model performance for the deployed model.

Create and run segments and policies

The cells under the “Segments and Policies” section create segments (or subsets of data), and policies that, when deployed, enable VIANOPS to analyze inferences to detect issues with drift and model performance. Add segments to run policies on narrow populations of interest. When configured with a segment, a policy runs on all specified features (e.g., defined as part of feature_weights) and then runs separately for each defined segment.

The names for the segments and policies are defined earlier in the notebook (in the section “Specify imports and set up variables”). Specifically, the operations in these cells create the following segments and policies:

- Brooklyn-Manhattan segment (segment1_name variable) includes only data for pickup and dropoff locations in Brooklyn and Manhattan.

- Williamsburg-Manhattan segment (segment2_name variable) includes only data for pickup and dropoff locations in Williamsburg and Manhattan.

- Month-to-month drift policy (base_policy_month variable) looks for feature drift in the inferences for the current month (target window) by comparing them to the inferences from the prior month (baseline window).

- Week-to-week drift w/segments (policy1 variable) looks for feature drift in the inferences for the current week (target window) by comparing them to the inferences from the previous two weeks (baseline window).

- Day-to-day drift w/segments (policy2 variable) looks for feature drift in the inferences for the current day (target window) by comparing them to the inferences from the prior day (baseline window).

All three policies use the Population Stability Index (PSI) metric on data in three columns (est_trip_distance, est_travel_time, and est_extra_amount), while the Month-to-month policy also includes the data in the columns PULocation and DOLocation. The policies detect when the drift measure exceeds 0.1 (warning level flag) or 0.3 (critical level flag). When run, the policies look for drift violations across the defined columns with equal weights. Additionally, the Week-to-week and Day-to-day distance-based policies (policy1 and policy2) look for drift violations within the two defined segments, Segment1 and Segment2.

- MAE performance policy (mp_policy variable) looks for performance drift. Using the Mean Absolute Error (MAE) metric, it compares prediction and ground truth values for the current day (target window) to the data from the prior day (baseline window). If the drift measure exceeds 5%, a warning level flag is set; if it exceeds 15%, a critical level flag is set. In addition to looking for performance drift violations across all data for the defined window, the policy also runs separately for the two defined segments (Segment1 and Segment2).
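To make the PSI thresholds more concrete, here is a minimal sketch of a PSI calculation for one numeric column, followed by the warning and critical checks described above. It uses the common textbook formulation with equal-width bins taken from the baseline window. The bin count, the small floor for empty bins, and the function names are assumptions; the platform’s own binning and calculation may differ.

```python
import math


def psi(baseline, target, bins=10, eps=1e-6):
    """Population Stability Index of target against baseline for one column."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range go into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(target)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def flag(value, warning=0.1, critical=0.3):
    """Map a drift measure to the flag levels used by the sample policies."""
    if value > critical:
        return "critical"
    if value > warning:
        return "warning"
    return "ok"


baseline = [float(v) for v in range(100)]
shifted = [v + 50.0 for v in baseline]

print(flag(psi(baseline, baseline)))  # ok
print(flag(psi(baseline, shifted)))   # critical
```

Identical windows give a PSI of exactly zero, while a large shift in the target window pushes mass into bins that were rare or empty in the baseline and drives the value past the critical threshold.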

Run each of the cells in this section, one after the other. When done, the new policies and segments are visible in the VIANOPS UI, in the Segments and Policies pages for the model. See the Explore sample taxi fare model tutorial for more details.

Note: VIANOPS uses Coordinated Universal Time (UTC) format. If your local time or cloud node offset is different from UTC time, it is highly recommended that you set your platform profile in the Settings Manager (Settings > Profile & Communications) to UTC to ensure drift policy data and results have date/timestamps for the day you run them (in your local or cloud node time zone).

For example, if you are in the Pacific Time Zone (PST), your local time offset is -8:00. If you run policies late in the day, the data may have date/timestamps for the following day.
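The date shift is easy to check with the Python standard library. The sketch below converts a late-afternoon Pacific Standard Time timestamp to UTC; the specific date and time are arbitrary examples, and the function name is hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Fixed UTC-8 offset for Pacific Standard Time (ignores daylight saving time).
PST = timezone(timedelta(hours=-8), "PST")


def utc_date(local_dt):
    """Return the calendar date of a timezone-aware datetime in UTC."""
    return local_dt.astimezone(timezone.utc).date()


local_run = datetime(2024, 1, 15, 17, 30, tzinfo=PST)
print(local_run.date())     # 2024-01-15
print(utc_date(local_run))  # 2024-01-16
```

Any policy run started at or after 4:00 PM PST falls on the following calendar day in UTC.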

Now, run the cell “Run a preprocess job for policies”. This reads the model’s inference mapping for the specified policy timeframes ahead of time, which reduces policy processing time at runtime.

Run policies and model performance

At this point, you are ready to run the defined policies. You can either run the policies from the UI (from the policy page, Run Policy action) or from the notebook as explained here.

In the section “Trigger multiple runs for the policies”, click Run on the first cell and then on the second cell. The helper file method trigger_offset_runs_policies creates the necessary data and then starts each policy.

Then, run the cell in the “Submit a model performance job” section to run regression performance metrics (such as Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and Mean Absolute Error (MAE)) on the model data. You can view and analyze the results of performance metrics in the Model Dashboard to track the effectiveness of the deployed model. See the Explore sample taxi fare model tutorial for more details.

When you are ready to modify the notebooks to create segments and policies for your deployment, see the “Create a new segment for a policy” and “Create a new policy” sections of the Advanced monitoring tutorial.

Monitor the deployed model in the platform

To monitor your model, navigate to the deployment in the Model Dashboard and view the Latest Performance and Prediction Metrics charts. From the Policies page, review policy results as explained in the Explore sample taxi fare model tutorial.

Retrain and redeploy?

If you determine the model is drifting (and predictive performance is declining), you can retrain to get better performance and then redeploy the model to send new predictions.