Metrics

VIANOPS supports the following metrics:

- Performance metrics - classification models

- Performance metrics - regression models

- Drift metrics

- Data profiling metrics

- Custom metrics

All metrics are supported through the API. A subset of metrics are supported through the UI.

Performance metrics - classification models

VIANOPS supports the following performance metrics for classification models. If accessing in the UI (e.g., when creating a performance drift policy or visualizing model performance in the Model Dashboard), see more details for each metric.

For more on using these with an API, see the API documentation for performance drift policies. When calling the endpoint make sure to specify the API Value exactly as shown.

| Metric | Description | In UI | In API | API Value |

|---|---|---|---|---|

| Accuracy | Fraction of correct predictions. | Yes | Yes | accuracy |

| Area under the curve (AUC) | The area under the ROC curve, which quantifies the overall performance of a binary classifier compared to a random classifier | Yes | Yes | rocauc |

| Balanced accuracy | Average of recall obtained on each class. | Yes | Yes | balanced_accuracy |

| Bookmaker informedness (BM) | Sum of sensitivity and specificity minus 1. | Yes | bm |

|

| Diagnostic Odds Ratio (DOR) | Ratio of the odds of the test being positive if the subject has a disease relative to the odds of the test being positive if the subject does not have the disease. | Yes | dor |

|

| F1 Score (F1) | Harmonic mean of precision and recall. | Yes | Yes | f1 |

| False Discovery Rate (FDR) | Fraction of incorrect predictions in the predicted positive instances. | Yes | fdr |

|

| Fowlkes-Mallows index (FM) | Geometric mean of precision and recall. | Yes | fm |

|

| False Negatives (FN) | Incorrectly predicted negatives. | Yes | Yes | fn |

| False Negative Rate (FNR) | Fraction of positives incorrectly identified as negative. | Yes | fnr |

|

| False Omission Rate (FOR) | Fraction of incorrect predictions in the predicted negative instances. | Yes | for |

|

| False Positives (FP) | Incorrectly predicted positives. | Yes | Yes | fp |

| False Positive Rate (FPR) | Fraction of negatives incorrectly identified as positive. | Yes | fpr |

|

| Gini coefficient | A measure of inequality or impurity in a set of values, often used in decision trees. | Yes | Yes | modelgini |

| Lift | A measure of the effectiveness of a predictive model calculated as the ratio between the results obtained with and without the predictive model. | Yes | Yes | lift |

| Log loss | A performance metric for classification where the model prediction is a probability value between 0 and 1. Measures divergence of model output probability from actual value. Logloss = 0 is a perfect classifier. | Yes | Yes | logloss |

| Matthews Correlation Coefficient (MCC) | (TPTN - FPFN)/(sqrt((TP + FP)(TP + FN)(TN + FP)(TN + FN))). -1 to 1 range (1 is a perfect binary classifier, 0 is random, -1 is everything wrong). | Yes | mcc |

|

| Markedness (MK) | Sum of positive and negative predictive values minus 1. | Yes | mk |

|

| Negative Likelihood Rate (NLR) | Ratio of false negative rate to true negative rate. | Yes | nlr |

|

| Negative Predictive Value (NPV) | Fraction of identified negatives that are correct. | Yes | npv |

|

| Positive Likelihood Ratio (PLR) | Ratio of true positive rate to false positive rate. | Yes | plr |

|

| Precision | Fraction of identified positives that are correct. | Yes | precision |

|

| Probability calibration curve | A plot that compares the predicted probabilities of a model to the actual outcome frequencies, used to understand if a model’s probabilities can be taken at face value. | Yes | prob_calib |

|

| Prevalence Threshold (PT) | Point where the positive predictive value equals the negative predictive value. | Yes | pt |

|

| Recall | Sensitivity, True Positive Rate. Fraction of positives correctly identified. (Higher Recall minimizes False Negatives) | Yes | Yes | recall |

| Rate of Negative Predictions (RNP) | This term is not standard in machine learning. It could refer to a combined metric of recall and negative predictive value. | Yes | Yes | rnp |

| Receiver Operating Characteristics (ROC) | A plot that illustrates the diagnostic ability (TPR v FPR) of a binary classifier as its decision threshold is varied. | Yes | auc_score |

|

| Specificity | Fraction of negatives correctly identified. | Yes | specificity |

|

| True Negatives (TN) | Correctly predicted negatives. | Yes | Yes | tn |

| True Positives (TP) | Correctly predicted positives. | Yes | Yes | tp |

| Threat score (TS) | TP/(TP + FN + FP) Also known as the Critical Success Index. | Yes | ts |

Additional classification metrics available from the API

Several metrics of classification models are calculated using averaging techniques and accessible via the API. (See the API documentation for performance drift policies.)

| Metric | Description | API Value |

|---|---|---|

| F1, Micro-Averaged | Global average F-1 using total TP/TN/FP/FN. Better for imbalanced data. | micro[f1] |

| F1, Macro-Averaged | Average of per-class F-1 scores without regard to class size. | macro[f1] |

| F1, Weighted-Averaged | Average of per class F-1 scores weighted by support per class. | weighted[f1] |

| Precision, Micro-Averaged | Global average Precision using total TP/TN/FP/FN. Better for imbalanced data. Equal to F-1/Precision/Accuracy. | micro[precision] |

| Precision, Macro-Averaged | Average of per-class Precision scores without regard to class size. | macro[precision] |

| Precision, Weighted-Averaged | Average of per class Precision scores weighted by support per class. | weighted[precision] |

| Recall, Micro-Averaged | Global average Recall using total TP/TN/FP/FN. Better for imbalanced data. Equal to F-1/Precision/Accuracy. | micro[recall] |

| Recall, Macro-Averaged | Average of per-class Recall scores without regard to class size. | macro[recall] |

| Recall, Weighted-Averaged | Average of per class Recall scores weighted by support per class. | weighted[recall] |

Performance metrics - regression models

VIANOPS supports the following performance metrics for regression models. If accessing in the UI (e.g., when creating a performance drift policy or visualizing model performance in the Model Dashboard), see more details for each metric.

For more on using these with an API, see the API documentation for API - Performance drift. Value must be typed in the API exactly as shown.

| Metric | Description | In UI | In API | API Value |

|---|---|---|---|---|

| Mean Absolute Error (MAE) | Average of absolute prediction errors. (Error = predicted value - actual value in all cases) | Yes | Yes | mae |

| Mean Absolute Percentage Error (MAPE) | Average of absolute percentage prediction errors abs((pred value - actual)/actual). | Yes | Yes | mape |

| Mean Squared Error (MSE) | Average of squared prediction errors. | Yes | Yes | mse |

| Negative Mean Squared Error (NMSE) | Negative of MSE. | Yes | Yes | mse |

| Negative Root Mean Squared Error (NRMSE) | Negative of RMSE. | Yes | Yes | rmse |

| Negative Mean Absolute Error (NMAE) | Negative of MAE. | Yes | Yes | mae |

| Negative Mean Absolute Percentage Error (NMAPE) | Negative of MAPE. | Yes | Yes | mape |

| R-Squared (R2) | The proportion of the variance in the dependent variable that is predictable from the independent variable(s). | Yes | Yes | r2_score |

| Root Mean Squared Error (RMSE) | Square root of MSE. | Yes | Yes | rmse |

Drift metrics

VIANOPS supports the following drift metrics.

For more on using these with an API, see API - Performance drift.

| Metric | Description | In UI | In API |

|---|---|---|---|

| Jensen-Shannon Divergence for prediction drift | Square root of the J-S divergence, which measures the average divergence of baseline and target from the mean of the two distributions. | Yes | Yes |

| Population Stability Index (PSI) for prediction drift | Sum of (baseline frequency - target frequency) x log (baseline/target) across all defined bins. | Yes | Yes |

| Jensen-Shannon Divergence for feature drift | Square root of the J-S divergence, which measures the average divergence of baseline and target from the mean of the two distributions | Yes | Yes |

| Population Stability Index (PSI) for feature drift | Sum of (baseline frequency - target frequency) x log (baseline/target) across all defined bins. | Yes | Yes |

Data profiling metrics

VIANOPS supports Numerical and Categorical data profiling metrics.

Numerical data profiling metrics

| Metric | Description | In UI | In API |

|---|---|---|---|

| Count | Total number of observations or records. | Yes | Yes |

| Min/Mean/Max | The smallest, average, and largest values respectively. | Yes | Yes |

| 1%/99% | The 1st and 99th percentiles of the data respectively (values below which a certain percent of observations fall). | Yes | Yes |

| 5%/95% | The 5th and 95th percentiles of the data respectively. | Yes | Yes |

| 10%/90% | The 10th and 90th percentiles of the data respectively. | Yes | Yes |

| 25%/50%/75% | The 25th (1st quartile or Q1), 50th (median or Q2), and 75th (3rd quartile or Q3) percentiles of the data respectively. | Yes | Yes |

| Mean-Std/Mean/Mean+Std | The mean value minus one standard deviation, the mean value, and the mean value plus one standard deviation respectively. This provides a sense of the spread of the data around the mean. | Yes | Yes |

Categorical data profiling metrics

VIANOPS supports the following categorical metrics.

| Metric | Description | In UI | In API |

|---|---|---|---|

| Count | Total count. | Yes | Yes |

| Unique values | Number of unique values. | Yes | Yes |

| Value counts | Dictionary of {value: value_count}. | Yes | Yes |

Custom metrics

You can define custom metrics based on the standard performance metrics listed above, for both regression and classification models.

- Define the custom metric with either the /v1/model-metrics REST API or the vianops_client.models.riskstore.model-metrics SDK endpoint. (To access REST API docs, see APIs.)

- Define the metric with a python function or a py_statement.

- Define your custom metric based on any of the VIANOPS standard metrics or datasources:

- Standard metrics

- To view datasources you can use to create custom metrics, use the following APIs:

- REST API — /v1/performance/model-metrics.

- SDK endpoint — vianops_client.models.riskstore.performance.model-metrics SDK endpoint.

- Custom metrics appear in italics in all the UI elements where standard metrics appear.

-

The following is an example of the body for a model-metrics endpoint defining a custom metric with language set to

py_statement:[ { "metric_name": "relative_squared_error3", "description": "Compare RMSE to MAE to determine the distribution of errors.", "language": "py_statement", "metric_type": "model_performance", "experiment_type": "regression", "definition": "rmse / mae", "abbreviation": "RSE", "alt_names": ["rmm"], "full_name": "Relative Squared Error", "status": "active", "metric_category": "custom", "metric_tags": { "for_display": true, "for_custom_definition": true, "is_metric_of_interest": true, "is_percent": false, "lower_is_better": false, "needs_predict_proba": false, "needs_class_of_interest": false } } ] -

The following is an example of notebook code to define a custom metric with language set to

python:load_api = ModelMetricsV1API() model = V1ModelMetricsModel( metric_name=custom_metrics[2], description="topXauc refers to the area under the ROC curve when only the top 'X' predicted probabilities are considered for evaluation.", full_name="topXAUC", abbreviation="topX AUC", language="python", metric_type="model_performance", experiment_type="binary_classification", definition = { "method": "calculateTopXauc", "init_params": {"filtered_df": "drifter_df", "top_x": 10}, "module": "custom", "classname": "" }, ) models=V1ModelMetricsModelList(__root__=[]) models.__root__.append(model) create_res = load_api.create(models) print(create_res)This example assumes the following:

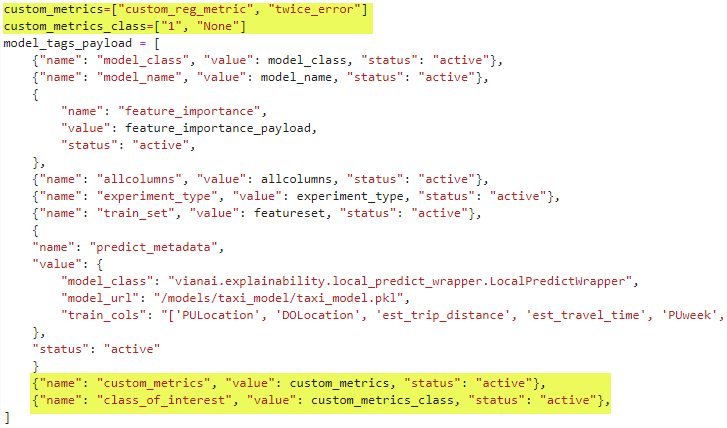

custom_metricshas been defined previously as a list of at least three items representing the name for each custom metric. In this example, the third item in the list is the name of this custom metric.definition.methodcallscalculateTopXauc, which is defined in the file/source/custom.py.module.custompoints to the file/source/custom.py.

- Add the following tags to the

model_tags_payloadsection of your notebook.

-

custom_metrics

{"name": "custom_metrics", "value": custom_metrics, "status": "active"}This object assumes you have assigned the variable

custom_metricspreviously in the notebook, as shown in the example below. This example assumes you have created custom metrics named “custom_reg_metric” and “twice_error” with the custom metric endpoint. -

class_of_interest

{"name": "class_of_interest", "value": custom_metrics_class, "status": "active"}This object assumes you have assigned the variable

custom_metrics_classpreviously in the notebook as shown in the example below. You only need to provide this tag for binary classification models. For binary classification models, the classes of interest can be 0 or 1, and if neither then you must assign to “None”.